Predicting energy demand: A machine learning architecture

07 June, 2021 - 7 min read

Machine Learning (ML) has become ubiquitous in the age of cloud computing. Many of the ML resources online cover model training or the theory behind the algorithms, which is vital if you plan to create a successful ML model.

However, as engineers we also need to put the results generated by our machine learning model in the hands of our users. That requires a scalable and reliable architecture that can be reused across many products. This post goes over a sample custom architecture that might fit your needs.

The requirement

The purpose of this architecture is to create a product that enables the Operation and Maintenance (O&M) personnel of a manufacturing plant to know how the energy demand of a chilled water HVAC system will behave in the near future (~ 15 days). This will allow the team to plan operational changes that can result in energy savings.

After a brainstorming session with the stakeholders, we agreed that the architecture had to meet the following requirements for the MVP:

1. Predict the energy demand of a chilled water HVAC system at any point in the near future (15 days).

2. Present the predicted data to two types of stakeholders:

   - Business stakeholders need to access the data in a user-friendly format, e.g. Excel or Microsoft Power BI, to make decisions based on the predicted energy cost.

   - Technical stakeholders need to integrate the predictions into their proprietary systems, e.g. Building Management Systems (BMS), to make operational changes that can result in energy savings.

3. Have the ability to improve the ML model as the independent variables it was trained on evolve over time.

4. Have the ability to extend the architecture to predict other O&M-related data points as the product grows.

The architecture

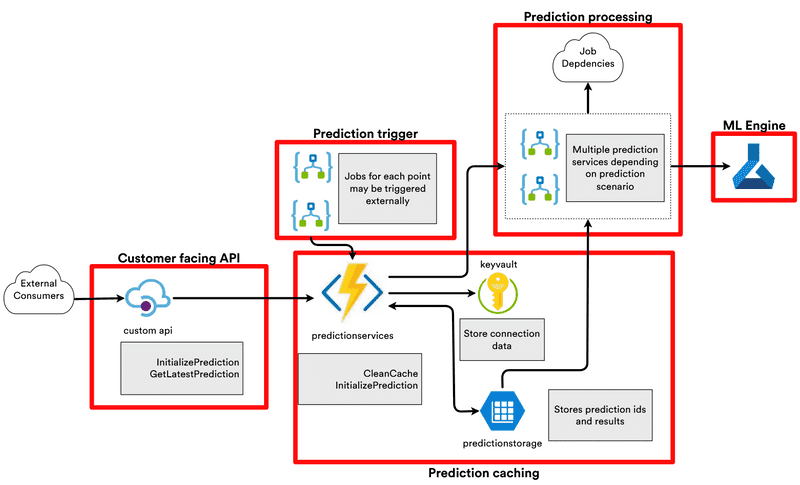

This is an overview of the proposed architecture. It will be hosted entirely in Azure. I will go over each of its components and their role, as well as give a brief description of why each of the Azure resources used was chosen.

The components

ML Engine

Azure ML Studio lets us expose the model as an API that the prediction workflows consume, and it also enables us to retrain and redeploy the model when needed. This makes it possible to iterate on and improve the predictions as the independent variables (weather data and space occupation) change over time, which helps to meet requirements (1) and (3).
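As a rough illustration of how a prediction workflow consumes the ML Engine, the Python sketch below builds (without sending) a POST request to a scoring endpoint. The endpoint URL, the `{"data": [...]}` payload shape and the feature names are assumptions for this example; the real schema depends on how the model is deployed. Key-based Azure ML endpoints typically expect the key as a Bearer token in the `Authorization` header.

```python
from __future__ import annotations

import json
import urllib.request


def build_scoring_request(scoring_url: str, api_key: str, rows: list[dict]) -> urllib.request.Request:
    """Build (but do not send) a POST request to an ML scoring endpoint.

    The {"data": [...]} payload shape is an assumption for this sketch; the
    actual schema is defined by the deployed model's scoring interface.
    """
    body = json.dumps({"data": rows}).encode("utf-8")
    return urllib.request.Request(
        scoring_url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Key-based auth: the API key is sent as a Bearer token.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical feature row for one forecast hour.
request = build_scoring_request(
    "https://example.invalid/score",  # placeholder URL
    "<api-key>",
    [{"timestamp": "2021-06-08T09:00:00Z", "outdoor_temp_c": 24.1, "occupied_rooms": 4}],
)
# To actually send it: urllib.request.urlopen(request, timeout=30)
```

Keeping request construction separate from sending makes the workflow logic easy to test without calling the live endpoint.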

Prediction trigger

The prediction trigger starts a new prediction for a given data point according to a set of business rules. For this purpose, an Azure Logic App will be used for every data point. If the business rules that trigger the prediction are updated (or new ones are required), a new Logic App can be created very quickly through the Azure Portal. This helps to meet requirement (4).

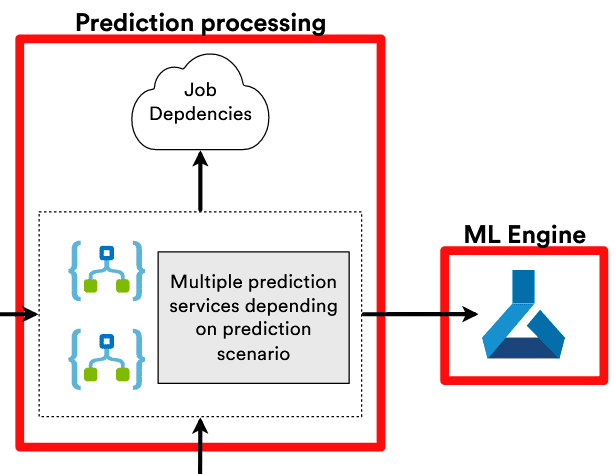
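In this architecture the trigger rules live in the Logic App itself (for example, in a Recurrence trigger). Purely to illustrate what such a business rule might look like, here is a hypothetical "refresh once a day, no earlier than 06:00 UTC" rule written as a Python function; `RUN_HOUR_UTC` and the rule itself are assumptions, not the rules used in the project.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical business rule: refresh the 15-day prediction once per day,
# no earlier than 06:00 UTC. Both datetimes are assumed to be in UTC.
RUN_HOUR_UTC = 6


def should_trigger(now: datetime, last_run: Optional[datetime]) -> bool:
    """Return True if a new prediction run should start (illustrative rule only)."""
    if last_run is None:
        return True  # no prediction exists yet: run immediately
    if now.hour < RUN_HOUR_UTC:
        return False  # too early in the day
    return last_run.date() < now.date()  # run only if it has not run today


now = datetime(2021, 6, 7, 7, 0, tzinfo=timezone.utc)
print(should_trigger(now, datetime(2021, 6, 6, 6, 0, tzinfo=timezone.utc)))  # True
print(should_trigger(now, datetime(2021, 6, 7, 6, 30, tzinfo=timezone.utc)))  # False
```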

Prediction processing

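The prediction processing workflow queries the APIs that provide the model's inputs and calls the ML Engine API to obtain the prediction. As more data point predictions are added to the product, we can add their corresponding triggers and prediction workflows as new Logic Apps, meeting requirement (4).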

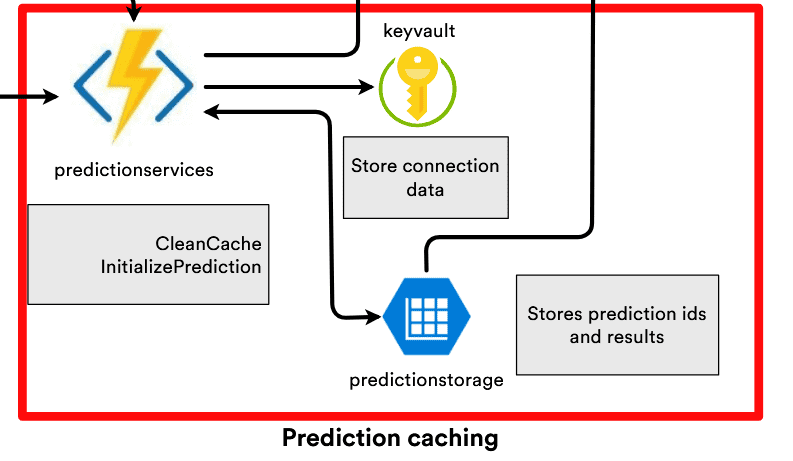

Prediction caching

The caching component serves several purposes. It limits the number of calls to our ML API and its dependent services, and it minimizes the number of times the Azure Logic Apps run.

Using an Azure Function alongside Azure Table Storage to persist the cached data further decreases cost while keeping the entire infrastructure ready to respond to new requests immediately. As a result, the most complex parts of the architecture only run when needed, not every time an external consumer requests the result of a prediction.
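One way the caching component could behave is the cache-aside pattern sketched below: serve a stored prediction while it is fresh, and only run the expensive prediction path when it is missing or stale. The in-memory dictionary is a stand-in for Azure Table Storage (its keys mirror a table entity's PartitionKey/RowKey pair), and the TTL, key names and `PredictionCache` class are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class PredictionCache:
    """In-memory stand-in for the Table Storage cache (illustrative only).

    Entries are keyed like table entities: (partition_key, row_key).
    """

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.time):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable clock, handy for tests
        self._entries: Dict[Tuple[str, str], Tuple[float, object]] = {}

    def get_or_compute(self, data_point: str, key: str, compute: Callable[[], object]) -> object:
        now = self._clock()
        entry = self._entries.get((data_point, key))
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh hit: no Logic App or ML call needed
        value = compute()  # miss or stale entry: run the expensive prediction path
        self._entries[(data_point, key)] = (now, value)
        return value


calls = []


def run_prediction():
    # Hypothetical placeholder for triggering the prediction workflow.
    calls.append(1)
    return {"kwh": [120.0, 118.5]}


cache = PredictionCache(ttl_seconds=3600)
cache.get_or_compute("chilled-water-energy", "latest", run_prediction)
cache.get_or_compute("chilled-water-energy", "latest", run_prediction)
print(len(calls))  # 1: the second request was served from the cache
```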

The prediction caching component also uses Azure Key Vault. Storing secrets and configuration values in a key vault has many advantages, which will be covered in future posts.

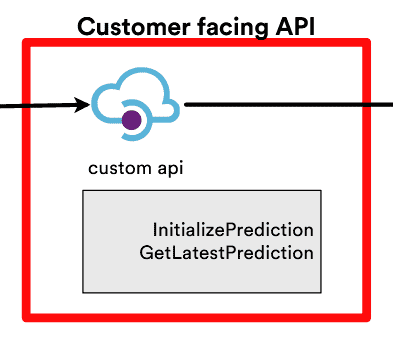
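Azure Functions can surface Key Vault secrets through app settings that use Key Vault references; the function code then reads them as ordinary environment variables. A minimal sketch of such a settings loader, assuming hypothetical setting names, that fails fast when a value is missing:

```python
import os
from typing import Dict, Mapping

# Hypothetical setting names. In Azure, each value could be an app setting
# that is a Key Vault reference, resolved by the platform before the code runs.
REQUIRED_SETTINGS = ("ML_API_URL", "ML_API_KEY", "CACHE_TABLE_NAME")


def load_settings(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read required settings from the environment, failing fast if any is missing."""
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing required settings: {', '.join(missing)}")
    return {name: env[name] for name in REQUIRED_SETTINGS}
```

Passing the mapping as a parameter keeps the loader testable with a plain dictionary instead of the real environment.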

Customer facing API

The customer facing API is implemented with Azure API Management, which lets us bundle APIs into products, set up policies for our stakeholders such as usage quotas, and manage users.

The API can be consumed by common data tools such as Excel or Power BI. Because it is exposed as a plain HTTP endpoint, it can also be called from any web page or from within a proprietary BMS, fulfilling requirement (2).

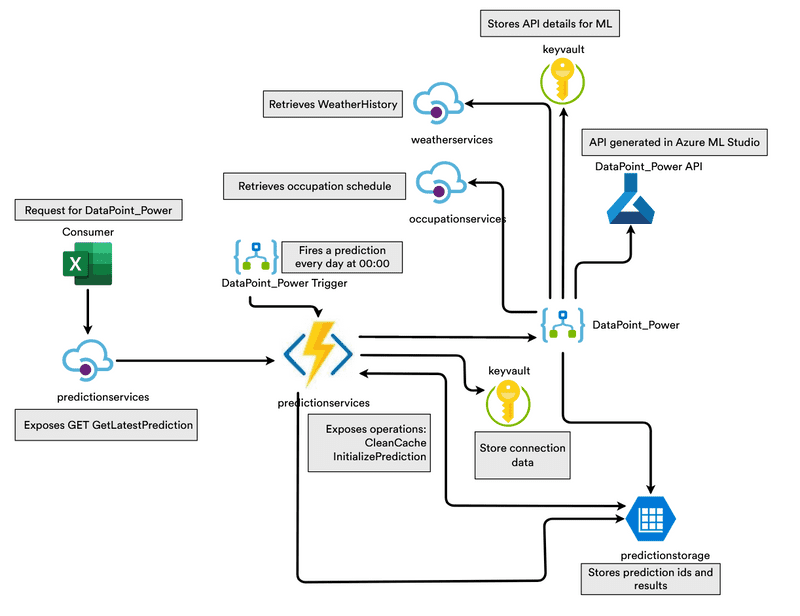

The implementation

The resulting implementation of the architecture is depicted in the following diagram. Since the energy demand of the chilled water HVAC system depends on both the weather forecast and the occupation schedule of the different meeting rooms, the Azure Logic App that controls the prediction processing for this data point needs to query the APIs that expose this data.

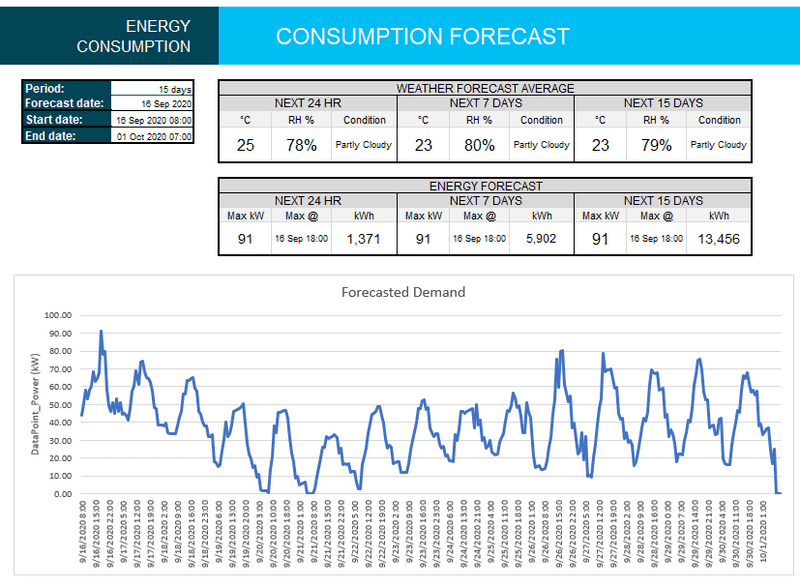
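The input assembly that the prediction processing Logic App performs before calling the model can be sketched in Python as follows. It assumes hypothetical schemas: an hourly weather forecast list with `timestamp`, `temp_c` and `humidity` fields, and an occupation schedule mapping each timestamp to the number of occupied meeting rooms.

```python
from typing import Dict, List


def build_feature_rows(weather: List[dict], occupancy: Dict[str, int]) -> List[dict]:
    """Join the hourly weather forecast with room occupation by timestamp (hypothetical schemas)."""
    rows = []
    for w in weather:
        ts = w["timestamp"]
        rows.append({
            "timestamp": ts,
            "outdoor_temp_c": w["temp_c"],
            "relative_humidity": w["humidity"],
            # Hours with no booking in the schedule are treated as unoccupied.
            "occupied_rooms": occupancy.get(ts, 0),
        })
    return rows


weather = [
    {"timestamp": "2021-06-08T09:00Z", "temp_c": 24.1, "humidity": 60},
    {"timestamp": "2021-06-08T10:00Z", "temp_c": 25.3, "humidity": 58},
]
occupancy = {"2021-06-08T09:00Z": 4}
rows = build_feature_rows(weather, occupancy)
```

The weather forecast drives the row count here, so every forecast hour gets a prediction input even when no meetings are booked.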

For this specific implementation, a report was generated in Excel, where VBA code called the GET GetLatestPrediction endpoint and passed the end user's API key for our API product as a header. This is the generated report for one of the test runs:

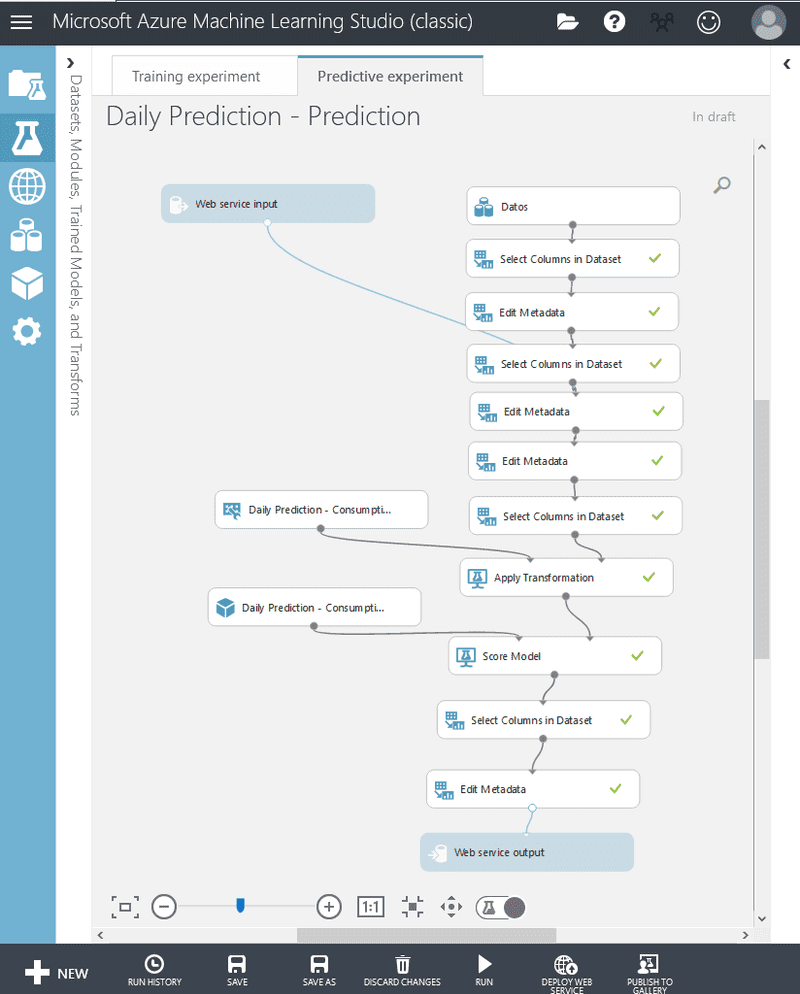
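Outside Excel, for example from a BMS integration, the same GetLatestPrediction call could be made as in the Python sketch below. Azure API Management reads the subscription key from the `Ocp-Apim-Subscription-Key` header by default; the base URL is hypothetical, and the response body is assumed to be JSON.

```python
import json
import urllib.request

# Hypothetical API Management gateway URL and API path.
APIM_BASE_URL = "https://example-apim.azure-api.net/energy"


def build_latest_prediction_request(subscription_key: str) -> urllib.request.Request:
    """Build a GET request for the latest prediction, authenticated with an APIM subscription key."""
    return urllib.request.Request(
        f"{APIM_BASE_URL}/GetLatestPrediction",
        # Default header name that API Management checks for subscription keys.
        headers={"Ocp-Apim-Subscription-Key": subscription_key},
        method="GET",
    )


def fetch_latest_prediction(subscription_key: str) -> object:
    """Send the request and decode the (assumed JSON) response body."""
    request = build_latest_prediction_request(subscription_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```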

In case you are wondering what the Azure ML training interface looks like, it is pretty simple (although the training process itself was fairly time-consuming).

Final thoughts and next steps

One next step worth exploring is how the architecture can accommodate a retraining scenario. Currently, retraining has to be done manually; however, it could be integrated into a regular workflow, since Azure ML Studio exposes an API for this purpose. This would also bring in considerations such as API versioning, in case we introduce breaking changes and need to support older consumers.
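If measured energy demand were available, an automated workflow could compare it against earlier predictions and decide when to call the retraining API. A minimal sketch using mean absolute percentage error (MAPE); the 15% threshold is a hypothetical value, and MAPE is just one possible retraining signal, not the one used in the project.

```python
from typing import Sequence

MAPE_THRESHOLD_PCT = 15.0  # hypothetical tolerance agreed with O&M


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, skipping hours with zero measured demand."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    pairs = [(a, p) for a, p in zip(actual, predicted) if a != 0]
    if not pairs:
        raise ValueError("no non-zero actual values to compare against")
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in pairs) / len(pairs)


def needs_retraining(actual: Sequence[float], predicted: Sequence[float],
                     threshold_pct: float = MAPE_THRESHOLD_PCT) -> bool:
    """Flag the model for retraining when its recent error exceeds the threshold."""
    return mape(actual, predicted) > threshold_pct
```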

Unfortunately, although this architecture did come to life as a proof of concept, it was never released as a full product because of a technical limitation on the stakeholder side: the actual energy demand was not being monitored, so there was no data to keep improving the ML model.

About the resources

If you wish to learn more about the Azure resources used in this architecture, feel free to dive into their documentation: